The time has come, the Walrus said,

To talk of many things:

Of shoes – and ships – and sealing-wax –

Of cabbages and kings –

Lewis Carroll

“The Walrus and the Carpenter”

Introduction

Recently, I have been researching the role of social media in the spread of modern propaganda. One of the things that surprised me, although it probably shouldn’t have, is how rapidly misinformation spreads over the internet as compared to verifiable fact-based information. I choose to use the term verified or verifiable rather than true or truth. It seems to me that we have entered an age where each person decides on their own version of truth. Truth has become relative. It’s based not on verifiable and reproducible facts but rather on who said it, where you read it, or whether you agree with it. If it doesn’t fit with your preconceived notions, it can’t possibly be true.

A study by three MIT researchers found that false news spreads more rapidly on the social network Twitter (now X), by a substantial margin, than does factual news. “We found that falsehood diffuses significantly farther, faster, deeper, and more broadly than the truth, in all categories of information, and in many cases by an order of magnitude,” reported Sinan Aral, a professor at the MIT Sloan School of Management.

They found the spread of false information is not due primarily to bots programmed to disseminate inaccurate stories but rather due to people intentionally retweeting inaccurate news items more widely than factual statements.*

To better understand how social media impacts propaganda, it is important to understand how this phenomenon occurs. This leads to two related concepts, Echo Chambers and Filter Bubbles.

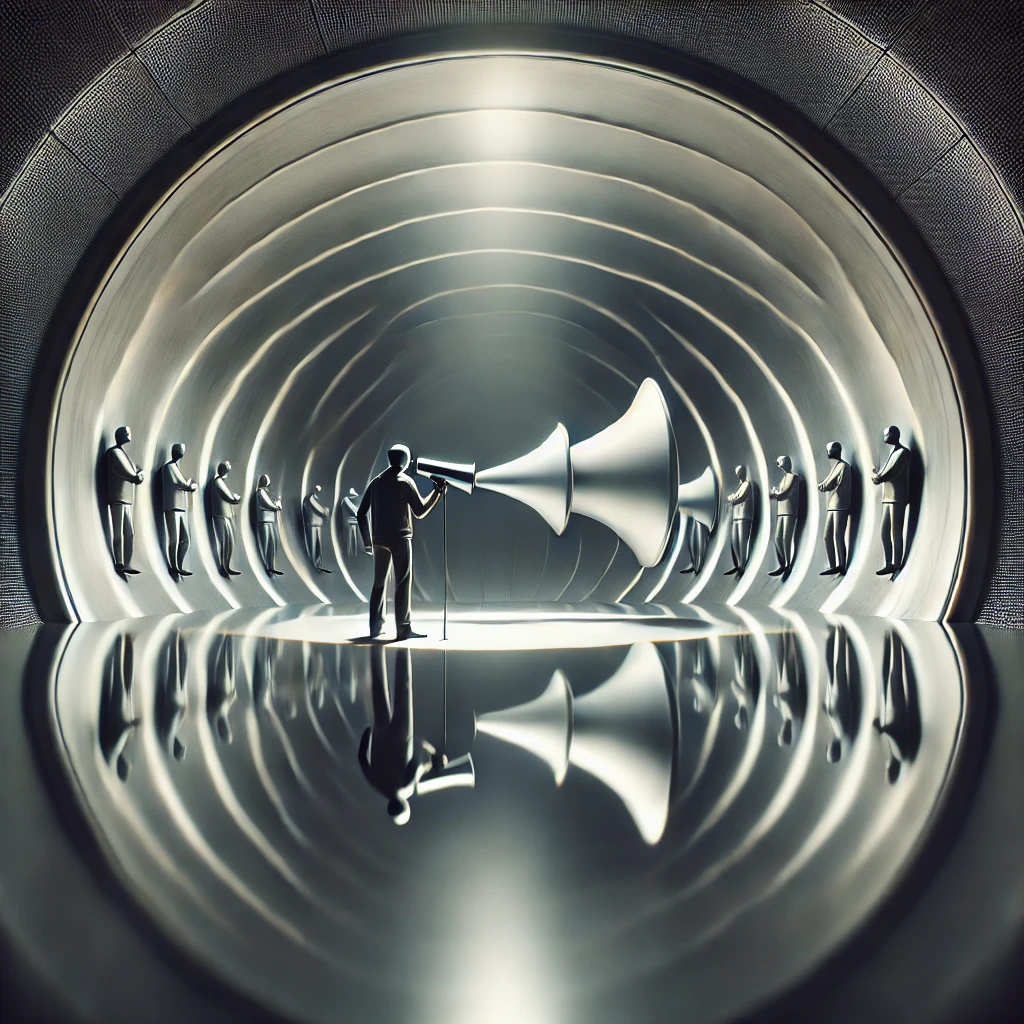

Echo Chambers

An echo chamber is a social structure in which individuals intentionally surround themselves only with those who share similar views and opinions. Within these chambers, dissenting opinions are either absent or actively suppressed, leading to the reinforcement of existing beliefs. On social media, echo chambers are created by users themselves, as they choose to follow, like, and share content that aligns with their views while ignoring or blocking contrary perspectives. This self-selection creates a feedback loop where individuals only hear what they already believe, which further entrenches their views.

Filter Bubbles

While echo chambers are largely the result of individual choices, filter bubbles are often shaped by algorithms. Social media platforms and search engines use algorithms to curate content for users based on their past behavior: what they click on, like, or share. The stated intention behind these algorithms is to provide users with content that is most relevant to them. But we shouldn’t overlook that it is also a method to make users more receptive to focused advertising and other forms of influence. The net result is a significant narrowing of the variety of opinions presented.

Individual Choice

Both echo chambers and filter bubbles limit the diversity of information by consistently showing users content that aligns with their established interests and beliefs, isolating them from conflicting viewpoints and leading to a narrow and sometimes distorted understanding of the world. Over time, this can result in a skewed perception of reality, where the individual believes that their perspective is universally accepted or unquestionably correct.

While echo chambers and filter bubbles are often discussed in terms of algorithms and platform design, it is crucial to recognize the role of individual decisions in their creation and perpetuation. Each time we choose to follow a particular account, share a piece of content, or engage in a discussion, we are making decisions that shape our information environment.

The choices we make, whether to engage with diverse perspectives or retreat into familiar territory, have a profound impact on the information we encounter and share. When we choose to engage only with like-minded individuals and content, we contribute to the formation of echo chambers. Similarly, when we rely on algorithms to curate our content without seeking out alternative viewpoints, we become trapped in filter bubbles.

Echo chambers and filter bubbles are not exclusive to any political affiliation, religion, ethnicity, or social class. They are found across society. The willing acceptance of echo chambers and filter bubbles is the antithesis of critical thinking. When we fail to recognize that we are not considering alternative views, regardless of our political orientation, we are succumbing to indoctrination rather than education. We become unthinking automatons rather than critically thinking individuals who decide what is best for ourselves. Failing to actively engage with the information that we receive, to ensure that we receive multiple viewpoints (even those with which we disagree), and to critically evaluate everything presented is a sure recipe for a loss of freedom.

*The paper, “The Spread of True and False News Online,” was published in Science, 9 March 2018.
